A new technology developed by University of Texas at Austin scientists can turn a person’s thoughts into readable text.

It’s called a semantic decoder, and it uses artificial intelligence to interpret brain activity. The decoder can allow communication with someone who is mentally conscious but unable to speak, such as someone who has had a stroke.

Alex Huth is an assistant professor of neuroscience and computer science at UT-Austin. He worked with doctoral student Jerry Tang to develop this decoder. Huth joined Texas Standard to talk about their research, the technology and the legal and ethical concerns surrounding it. Listen to the story above or read the transcript below.

This transcript has been edited lightly for clarity:

Texas Standard: Give us an overview of this brain activity decoder you’ve created. What is it and how does it work exactly? This sounds like one of these almost science fiction devices.

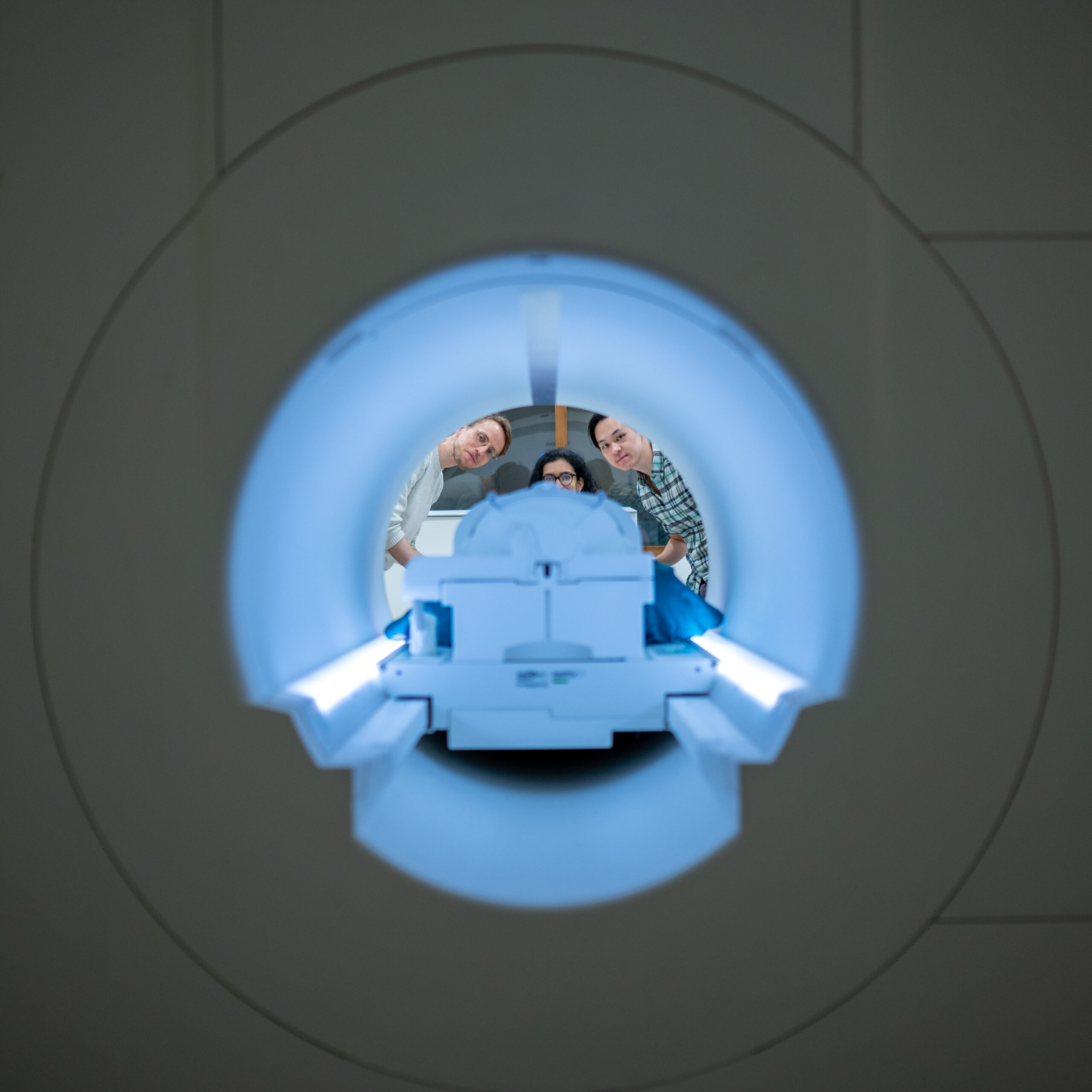

Alex Huth: Yeah, every day we’re moving a little bit of science fiction into science fact. So the way it works is we put a person in an MRI scanner, kind of like the one where you would get a medical MRI. But we’re doing functional MRI so we can record what’s happening in the brain. We build up a mapping of where different ideas, different thoughts are represented in the brain over many hours of training. And then we can use our algorithms to read those ideas out into words. So we can decode words that somebody is hearing if they’re listening to a podcast, for example, or even just thinking – if they’re telling a story inside their head.

So does the subject have to think particularly hard about it for it to register? How does it work?

So it seems like they have to be very intentionally thinking something. So we were concerned about kind of the mental privacy implications…

I was going to say, you’ve seen the movie “Minority Report,” right? I would think that there might be something like that.

So we’re worried about this. So we did some tests to see if the person, if they weren’t thinking thoughts very deliberately or if they were trying to think something else, could that upset the decoder? Could that make it not work? And it turns out it can. So the person has to be very deliberately, actively sort of thinking a sentence in their head, and then we can read that out. And of course, this only works on a person where we’ve trained this model with many hours of data.